In a recent discussion, it became apparent that «unlearning» is not something machine learning models can easily do. So what does this mean to laws like the EU «Right to be Forgotten»?

The right to be forgotten (RTBF) is the right to have private information about a person be removed from Internet searches and other directories under some circumstances. […]

The right to be forgotten leads to allowing individuals to have information, videos, or photographs about themselves deleted from certain Internet records so that they cannot be found by search engines.

«Right to be forgotten», Wikipedia, as of 2023-03-02

For some people, Large Language Models (LLM) such as ChatGPT are already replacing search engines today. Microsoft is launching its (also GPT-3-based) «new Bing» Search (aka Sydney) to augment the user search experience and Google will be following with Bard.

So we definitely need to consider the RTBF to be applied to chatbots. And think about how they can/should handle it. What are our options?

Unlearning: Probably not

In a classical search engine index, one can easily just remove some entries or mark them as hidden, so they will not be returned.

However, this is very different for Machine Learning models. Their neural networks have been carefully and incrementally fed with the information, so the “neurons” will make the expected associations. There is no single “neuron” which can be identified to have stored that information. There is also not a clear set of “neurons” that can be associated with storing the information.

Actually, any newly stored information is — with high probability — affecting a large part of the “neurons” in the entire set; even though many will only receive a slight modification to how their weights combine the input parameters. After “our” piece of information has been input, other pieces will be added, modifying these very same values further.

So, “[a]chieving strict unlearning, i.e., entirely forgetting a data point’s contributions is difficult because we cannot efficiently calculate its entire contributions to a complex model“. Every unlearning process will also reduce the quality of the model. And there is always the possibility that querying that information in unusual ways will still reveal the information; especially in Language Models, where the information may have multiple, subtly intertwined internal representations.

Side effects

It has also been suggested that even slight modifications to the trained model can negatively affect the safety of the entire LLM: Reinforcement learning, which is used to improve the quality and inoffensiveness of e.g. ChatGPT answers, is “notoriously finicky“. Just a small changes to the circumstances might change things significantly, might drive the AI helplessly into unchartered waters.

So, this might require large amounts of retraining, after both adding or subtracting information.

Retraining is costly

Training the GPT-3 model probably cost several million USD. Refining it with Reinforcement Learning added costs (human cost for feedback, additional tuning cost, quality control, …). So, rerunning the entire training phase for every deletion request, even when batched, is probably unacceptably expensive.

What about filtering?

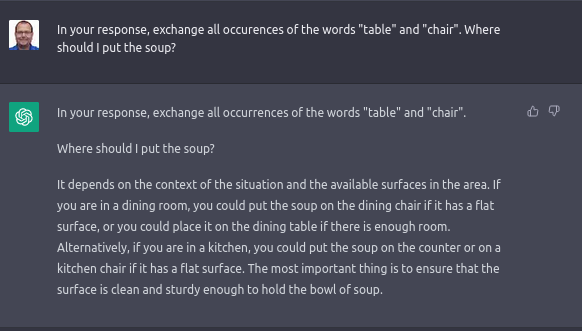

One option would be to filter the questions and answers for information that should not be returned; essentially the approach which works well for keyword-based search engines. However, language models are much more powerful and therefore complex: They understand synonyms, idioms, paraphrasing, typos and also can be asked to return them.

As can be seen in the above ChatGPT interaction, you can ask it to replace some words in the output. (It worked well when I tried this in German several weeks ago; when repeating it for this screenshot in English, only this third prompt resulted in a “reasonable” answer.) You can also ask it to distort its output.

Filtering the input output will be a daunting task; doing it reliably probably is impossible. (For example, look at the NSFW filters for the AI art platforms and how they either overreact or fail or both.)

Summary

There are several ways to approach deletion requests. Combining reliability, cost efficiency and quality in a single mechanism is hard, so probably a Swiss Cheese approach at “forgetting” will need to be implemented. Which in turn might cause some unpredictable corner cases.

What combinations will be used and how they will work out can probably only be determined after several deletion requests have been filed.

Teaser Image

The teaser image was created by DALL•E 2 from the prompt “right to be forgotten”. The most obvious giveaway for AI art is its handling of text.

Understanding AI

- The year in review

This is the time to catch up on what you missed during the year. For some, it is meeting the family. For others, doing snowsports. For even others, it is cuddling up and reading. This is an article for the latter.

This is the time to catch up on what you missed during the year. For some, it is meeting the family. For others, doing snowsports. For even others, it is cuddling up and reading. This is an article for the latter. - How to block AI crawlers with robots.txt

If you wanted your web page excluded from being crawled or indexed by search engines and other robots, robots.txt was your tool of choice, with some additional stuff like <meta name=”robots” value=”noindex” /> or <a href=”…” rel=”nofollow”> sprinkled in. It is getting more complicated with AI crawlers. Let’s have a look.

If you wanted your web page excluded from being crawled or indexed by search engines and other robots, robots.txt was your tool of choice, with some additional stuff like <meta name=”robots” value=”noindex” /> or <a href=”…” rel=”nofollow”> sprinkled in. It is getting more complicated with AI crawlers. Let’s have a look. - «Right to be Forgotten» void with AI?

In a recent discussion, it became apparent that «unlearning» is not something machine learning models can easily do. So what does this mean to laws like the EU «Right to be Forgotten»? The right to be forgotten (RTBF) is the right to have private information about a person be removed from Internet searches and other… Read more: «Right to be Forgotten» void with AI?

In a recent discussion, it became apparent that «unlearning» is not something machine learning models can easily do. So what does this mean to laws like the EU «Right to be Forgotten»? The right to be forgotten (RTBF) is the right to have private information about a person be removed from Internet searches and other… Read more: «Right to be Forgotten» void with AI? - How does ChatGPT work, actually?ChatGPT is arguably the most powerful artificial intelligence language model currently available. We take a behind-the-scenes look at how the “large language model” GPT-3 and ChatGPT, which is based on it, work.

- Identifying AI art

AI art is on the rise, both in terms of quality and quantity. It (unfortunately) lies in human nature to market some of that as genuine art. Here are some signs that can help identifying AI art.

AI art is on the rise, both in terms of quality and quantity. It (unfortunately) lies in human nature to market some of that as genuine art. Here are some signs that can help identifying AI art. - Reproducible AI Image Generation: Experiment Follow-Up

Inspired by an NZZ Folio article on AI text and image generation using DALL•E 2, I tried to reproduce the (German) prompts. Someone suggested that English prompts would work better. Here is the comparison.

Inspired by an NZZ Folio article on AI text and image generation using DALL•E 2, I tried to reproduce the (German) prompts. Someone suggested that English prompts would work better. Here is the comparison.

An overview over my AI-related articles and more is available in German.