If you wanted your web page excluded from being crawled or indexed by search engines and other robots, robots.txt was your tool of choice, with some additional stuff like <meta name="robots" value="noindex" /> or <a href="…" rel="nofollow"> sprinkled in.

It is getting more complicated with AI crawlers. Let’s have a look.

Traditional functions

- One of the first goals of

robots.txtwas to prevent web crawlers from hogging the bandwidth and compute power of a web site. Especially if the site contained dynamically generated context, possibly infinite. - Another important goal was to prevent pages or their content from being found using search engines. There, the above-mentioned

<meta>and<a rel>tags came in handy as well. - A non-goal was to use it for access control, even though it was frequently misunderstood to be useful for that purpose.

New challenges

Changes over time

The original controls focused on services which would re-inspect the robots.txt file and the web page on a regular basis and update it accordingly.

Therefore, it didn’t work well for archive sites: Should they delete old contents on policy changes or keep them archived? This is even more true for AI training material, as deleting training material from existing models is very costly.

Commercialization

Even in the pre-AI age, some sites desired a means to prevent commercial services from monetizing their content. However, both the number of such services and their opponents were small.

With the advent of AI scraping, the problem became more prominent, resulting e.g. in changes in the EU copyright law, allowing web site owners to specify whether they want their site crawled for text and data mining.

As a result, the the Text and Data Mining Reservation Protocol Community Group of the World Wide Web Consortium proposed a protocol to allow fine-grained indication of which content on a web site was free to crawl and which parts would require (financial or other) agreements. The proposal includes options for a global policy document or returning headers (or <meta> tags) with each response.

Also, Google started their competing initiative to augment robots.txt a few days ago.

None of these new features are implemented yet, neither in a web servers or CMS, nor by crawlers. So we need workarounds.

[Added 2023-08-31] Another upcoming “standard” is ai.txt, modeled after robots.txt. It distinguishes among media types and tries to fulfill the EU TDM directive. In a web search today, I did not find crawler support for it, either.

[Added 2023-10-06] Yet another hopeful “standard” is the “NoAI, NoImageAI” meta-tag proposal. Probably ignored by crawlers as well for now.

AI crawlers

Unfortunately, the robots.txt database stopped receiving updates around 2011. So, here is an attempt at keeping a list of robots related to AI crawling.

| Organization | Bot | Notes |

|---|---|---|

| Common Crawl | CCbot | Used for many purposes. |

| OpenAI GPT | Commonly listed as their crawler. However, I could not find any documentation on their site and no instances in my server logs. | |

| GPTBot | The crawler used for further refinement. [Added 2023-08-09] | |

| ChatGPT-User | Used by their plugins. | |

| Google Bard | Google-Extended | No separate crawl for Bard. But normal GoogleBot checks for robots.txt rules listing Google-Extended (currently only documented in English). [Added 2023-09-30] |

| Meta AI | — | No information for LLaMA. |

| Meta | FacebookBot | To “improve language models for our speech recognition technology” (relationship to LlaMa unclear). [Added 2023-09-30] |

| Webz.io | OmgiliBot | Used for several purposes, apparently also selling crawled data to LLM companies. [Added 2023-09-30] |

| Anthropic | anthropic-ai | Seen active in the wild, behavior (and whether it respects robots.txt) unclear [Added 2023-12-31, unconfirmed] |

| Cohere | cohere-ai | Seen active in the wild, behavior (and whether it respects robots.txt) unclear [Added 2023-12-31, unconfirmed] |

Please let me know when additional information becomes available.

An unknown entity calling itself “Bit Flip LLC” (no other identifying information found anywhere), is maintaining an interactive list at DarkVisitors.com. Looks good, but leaves a bad taste. Use at your own judgment. [Added 2024-04-14]

Control comparison

Search engines and social networks support quite a bit of control over what is indexed and how it is used/presented.

- Prevent crawling by

robots.txt, HTML tags, and paywalls. - Define preview information by HTML tags, Open Graph, Twitter Cards, …

- Define preview presentation using oEmbed.

For use of AI context, most of this is lacking and “move fast and break things” is still the motto. Giving users fine-grained control over how their content is used would help with the discussions.

Even though the users in the end might decide they actually do want to have (most or all) of their context indexed for AI and other text processing…

Example robots.txt

[Added 2023-09-30] Here is a possible /robots.txt file for your web site, with comments on when to enable:

# Used for many other (non-commercial) purposes as well

User-agent: CCBot

Disallow: /

# For new training only

User-agent: GPTBot

Disallow: /

# Not for training, only for user requests

User-agent: ChatGPT-User

Disallow: /

# Marker for disabling Bard and Vertex AI

User-agent: Google-Extended

Disallow: /

# Speech synthesis only?

User-agent: FacebookBot

Disallow: /

# Multi-purpose, commercial uses; including LLMs

User-agent: Omgilibot

Disallow: /Poisoning [Added 2023-11-04]

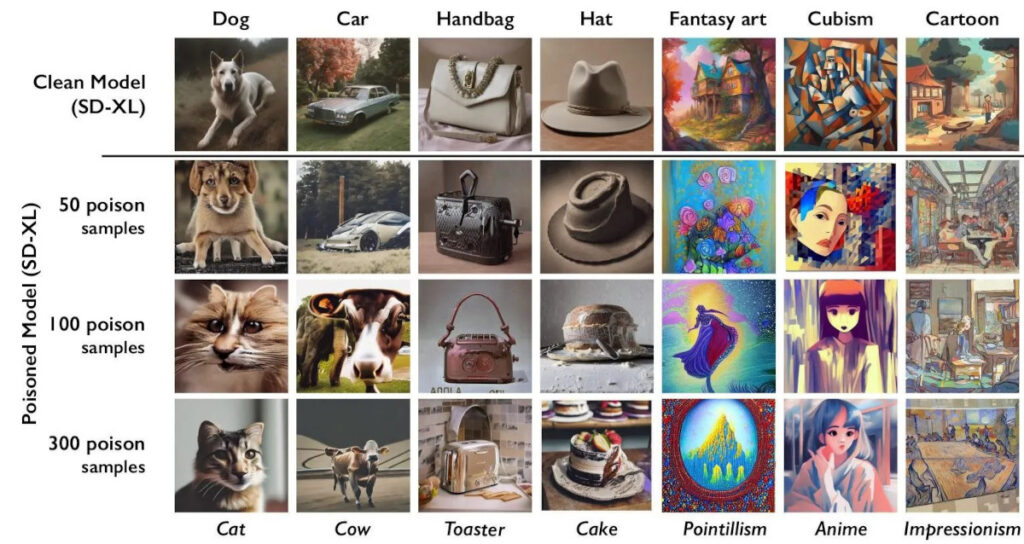

A different, more aggressive approach, is to start «poisoning» the AI models; something currently only supported for images. The basic idea is to use adversary images, that will be misclassified when ingested into the AI training and will therefore try and disrupt the training data and the resulting model.

It works by subtly changing the image, imperceptible to the human. The result is, however, that e.g. a cat is misclassified as a dog, when training. If enough bad training data is ingested into the model, part or all of the wrongly trained features will be used. In our case, asking the AI image generator to produce a dog may result in the generated dog to look more like a cat.

The “Nightshade” tool that is supposed to be released soon, which has this capability, is an extension of the current “Glaze” tool, which only results in image style misclassification.

Judge for yourself whether this disruptive and potentially damaging approach aligns with your ethical values before using it.

References

- Neil Clarke: Block the Bots that Feed “AI” Models by Scraping Your Web Site, 2023-08-23. [Added 2023-09-30]

- Benj Edwards: University of Chicago researchers seek to “poison” AI art generators with Nightshade, 2023-10-25, Ars Technica. [Added 2023-11-04]

- The Glaze Team: What is Glaze?, 2023. [Added 2023-11-04]

- Didier J. Mary: Blocquer les AI bots, 2023. [Added 2023-12-31]

- Richard Fletcher: How many news websites block AI crawlers?, Reuters Institute, 2024-02-22. [Added 2024-09-27]

One response to “How to block AI crawlers with robots.txt”

[…] How to block AI crawlers with robots.txt […]