AI art is on the rise, both in terms of quality and quantity. It (unfortunately) lies in human nature to market some of that as genuine art. Here are some signs that can help identify AI art.

The German 🇩🇪 translation is available as «Identifikation von KI-Kunst».

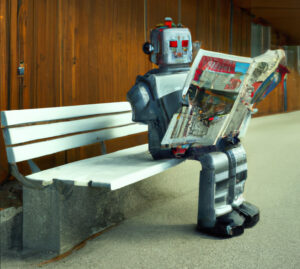

Tools like DALL•E 2, MidJourney, or StableDiffusion make it easy to create good-looking images. For example, the article’s teaser image was cropped from what DALL•E 2 returned when I asked it for something representing “inspecting ai art with a magnifying glass”.

They look pretty convincing, no? How can we tell they were not made by a human artist? Here are some indications. Of course, generative AI will get better over time, and human operators trying to “sell” AI works as their own will try to obfuscate some of these characteristics.

Anyway, here they are, mostly illustrated through examples from the “reproducible AI image generation” mini-series (part 1 in German, part 2 in English). As these are images which do not try to hide their AI origin (neither on the prompt level nor on the post-processing level), they are good for studying.

This post was inspired by an (ephemeral and gone) post by human digital artist Neobscura [NSFW]. I am grateful for their inspiration.

Watermarks

The most obvious (and easy to remove) are watermarks embedded by postprocessing in the AI service. For example, DALL•E 2 embeds the following at the lower right hand corner (taken from the teaser image):

Cropping the image or manually reconstructing what might be underneath is just too easy. So don’t expect a “forger” to leave that in.

[Added 2023-09-24] Non-visual watermarks may be included as well. For example, DALL•E 2 includes two custom EXIF metadata fields, containing “OpenAI--ImageGeneration--generation-<random-looking string>” and “Made with OpenAI Labs”, respectively. However, they are likely to be removed by accident or on purpose. (Take the images here and here as examples, if you want to find them. Clicking on the thumbnails leads to the original PNG files with that info.)

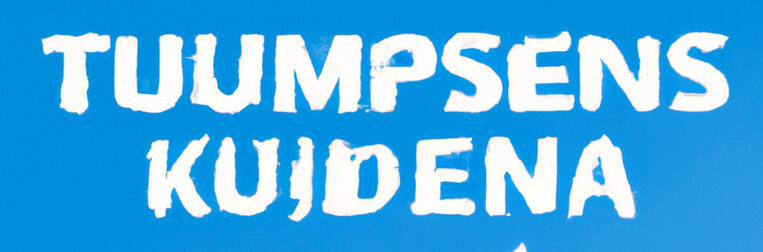

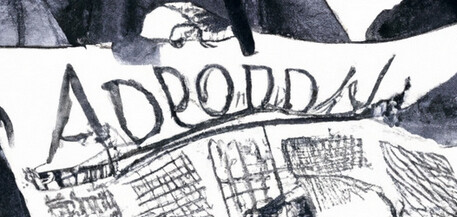
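To look for the metadata markers mentioned above without special tools, a minimal Python sketch could walk through the chunks of a PNG file and search the metadata-bearing ones. The two marker strings are the ones quoted in the text; everything else is an assumption of this sketch: that the markers are stored as plain ASCII, that they live in `tEXt`, `zTXt`, `iTXt` or `eXIf` chunks, and the helper names (`iter_png_chunks`, `metadata_payloads`, `find_markers`), which are made up for illustration. It does not verify checksums and is no replacement for a real metadata viewer.

```python
import struct
import zlib

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"

# Marker strings quoted in the text above. That they are stored as plain
# ASCII inside PNG metadata chunks is an assumption of this sketch.
MARKERS = (b"OpenAI--ImageGeneration--generation-", b"Made with OpenAI Labs")


def iter_png_chunks(data):
    """Yield (chunk_type, payload) for every chunk in a PNG byte string."""
    if not data.startswith(PNG_SIGNATURE):
        raise ValueError("not a PNG file")
    pos = len(PNG_SIGNATURE)
    while pos + 8 <= len(data):
        length, chunk_type = struct.unpack(">I4s", data[pos:pos + 8])
        yield chunk_type, data[pos + 8:pos + 8 + length]
        pos += 12 + length  # length field + type + payload + CRC


def _inflate(stream):
    """Decompress a zlib stream, returning b"" if it is damaged."""
    try:
        return zlib.decompress(stream)
    except zlib.error:
        return b""


def metadata_payloads(data):
    """Yield the searchable bytes of each metadata-bearing chunk."""
    for chunk_type, payload in iter_png_chunks(data):
        if chunk_type in (b"tEXt", b"eXIf"):
            yield payload
        elif chunk_type == b"zTXt":
            # Layout: keyword, NUL, compression method byte, zlib stream
            _keyword, _, rest = payload.partition(b"\x00")
            yield _inflate(rest[1:])
        elif chunk_type == b"iTXt":
            # Layout: keyword, NUL, compression flag, method,
            # language tag, NUL, translated keyword, NUL, text
            _keyword, _, rest = payload.partition(b"\x00")
            text = rest[2:].split(b"\x00", 2)[-1]
            yield _inflate(text) if rest[:1] == b"\x01" else text


def find_markers(data, markers=MARKERS):
    """Return the markers found in any metadata chunk, in marker order."""
    payloads = list(metadata_payloads(data))
    return [m for m in markers if any(m in p for p in payloads)]
```

Reading a downloaded PNG in binary mode and passing its bytes to `find_markers` returns the list of markers that were found. An empty list proves nothing, since the metadata may simply have been stripped.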

Text

In the “inspecting AI art” images, the text is amazingly clear (presumably, it tried to make a combination word including “ai” and “art”). However, text is often very crude at best. Even when the characters are recognizable, the outline is still often jagged.

Depending on the context, this may also be easily changed. And probably AI art platforms will learn to better deal with text in the future.

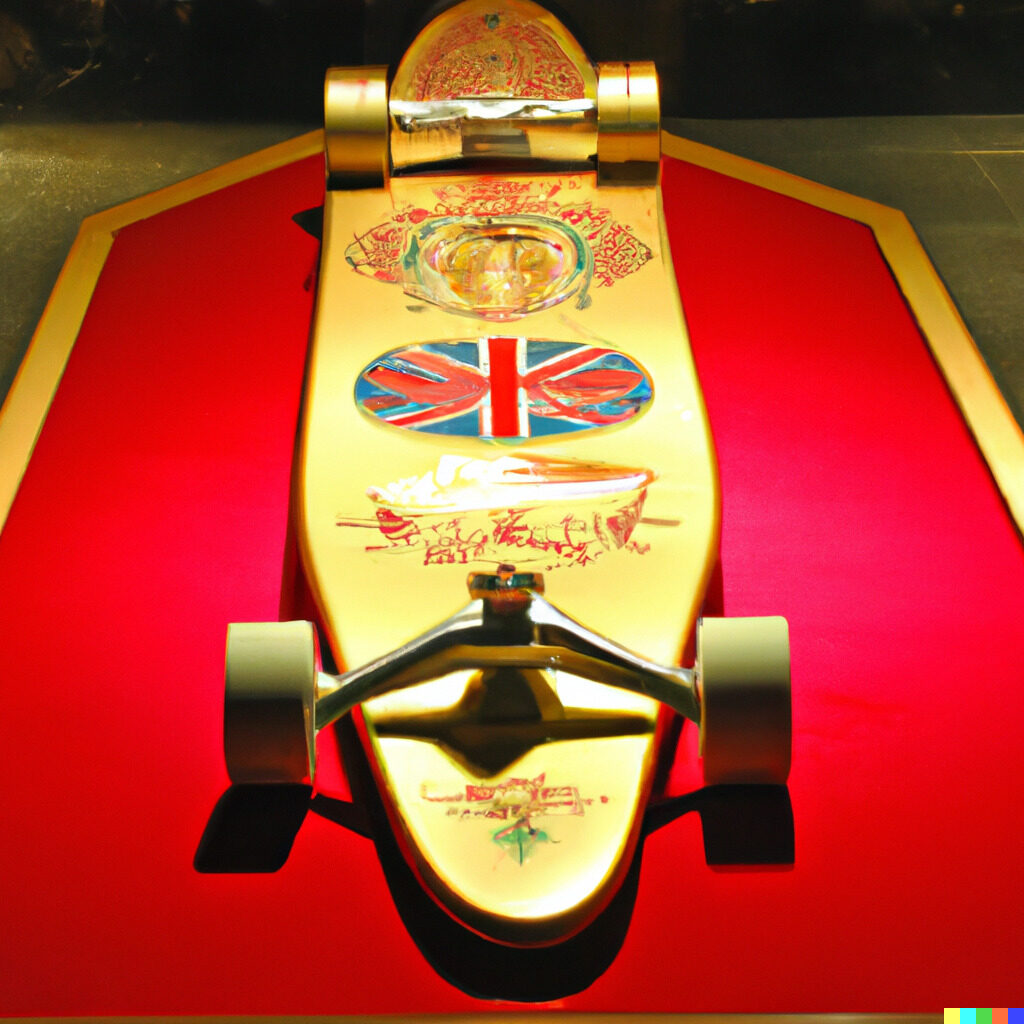

Unsolicited noise or detail

A generated image is essentially based on a collage from different sources. So there will generally be different styles involved, even though that can be partly controlled through the prompt. When several parts of the generated image are reproduced from source images with different characteristics, some of these characteristics will be inherited by the resulting region, where they will betray their AI origin: resolution, smoothness, presence/hardness/resolution of texture, and more.

Whenever there are unmotivated changes in the fine structure of the image, this is a good indication of AI art. Some of that can be obscured by smoothing or pixelation filters.
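One rough way to make such changes in fine structure visible is to compute a simple “roughness” value per image tile and look for neighboring tiles whose values differ sharply without a visible reason. The following is only a sketch under assumptions: the function name `tile_roughness` is made up, the image is assumed to be already available as a list of rows of grayscale values (0 to 255), and mean absolute difference between horizontal neighbors is just one possible proxy for texture. It is an aid for the human eye, not a detector.

```python
def tile_roughness(gray, tile=8):
    """Return a grid with the mean absolute horizontal pixel difference per tile.

    `gray` is a list of equal-length rows of grayscale values (0-255).
    Pixels that do not fill a complete tile at the right or bottom edge
    are ignored.
    """
    height, width = len(gray), len(gray[0])
    grid = []
    for top in range(0, height - tile + 1, tile):
        row = []
        for left in range(0, width - tile + 1, tile):
            total = 0
            for y in range(top, top + tile):
                # Compare each pixel with its right-hand neighbor in the tile
                for x in range(left, left + tile - 1):
                    total += abs(gray[y][x + 1] - gray[y][x])
            row.append(total / (tile * (tile - 1)))
        grid.append(row)
    return grid
```

A perfectly flat tile yields 0.0, while a noisy or heavily textured tile yields a high value. A smoothing or pixelation filter will even these values out, so low contrast between tiles does not rule anything out.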

Micro level not matching macro level

Zooming in on details may reveal that an item is not what it is supposed to be: Trees not being made of leaves of the right size or kind, shingles on the roof actually being bricks, … As in the “unsolicited noise or detail” above, instead of a pure generation of a coherent picture, the AI is essentially a copy-paste operation on steroids. With all the problems copy-paste entails.

These will probably be hard to (semi-)automatically remove and are a good indication that something is wrong.

Lack of symmetry

Things which are symmetric in the real world are not necessarily so in AI generated art.

Fixing this probably requires manual editing or inpainting. Or future image generators which will handle this better.

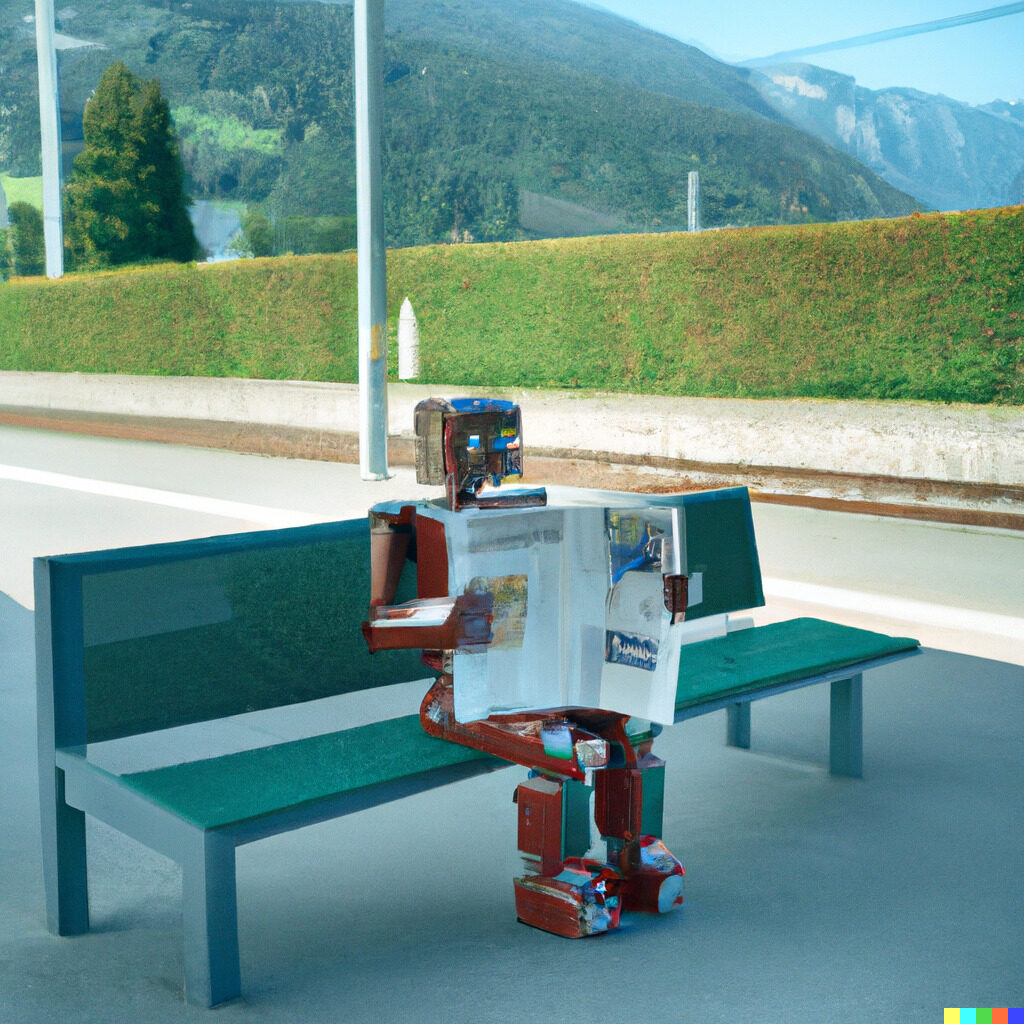

Impossible 3D geometry

Current AI art generators do not really obey 3D geometry. Therefore, we see some weird interactions. Benches and mechanisms in particular seem to be hard to reproduce, in my experience.

These problems might be fixable with additional prompting. Some generative AI projects are already looking into mapping the images onto 3D surfaces, so this will get better in the future. [Added 2023-01-26:] Already today, 3D models can be generated from an AI prompt.

Shadows and reflections

These are known to help identify manipulated photos, and they remain our friends when identifying AI-generated art.

These will probably improve when real 3D models are used.

Strange eyes and hair

Eyes not looking in the same direction or strange hair (including its direction) are a good indication of AI-created content. Animals in particular have lower-quality eye rendering, presumably due to the smaller amount of training data.

(Human) eyes and faces will probably receive plenty of attention in the near future, so expect that to become harder to identify.

Wrong number of limbs/fingers

Like in a kid’s (or comic) drawing, the number of limbs or fingers may differ from what we are used to seeing daily. Walking quadruped animals seen from the side are reported to have innovative leg counts, but none of them were available in the sample images I selected to draw from.

This is probably easy to fix using inpainting or by avoiding having anything interfering drawn near the limbs or fingers. Again, better training will probably improve these as well.

[Added 2023-02-04:] Hands look weird, partly because they are rarely the focus of images and are rarely shown without holding onto something.

Summary

When looking closely at the image, there are plenty of opportunities to identify things gone wrong. But be aware that false positives may happen (i.e., the image was created by a real human artist who just happened not to be careful enough); and so do false negatives (even though you find none of these artifacts, it may still be AI art, just better than the images that I used here; or post-processed with inpainting or image editing software).

Further reading

- Sarah Shaffi: ‘It’s the opposite of art’: why illustrators are furious about AI, The Guardian, 2023-01-23.

- Shira Ovide: How to spot the Trump and Pope AI fakes, The Washington Post, 2023-03-31. [Added 2023-12-17]

- Image manipulation, colloquially known as “photoshopping”, will sometimes result in similar patterns. A web search for “identifying image manipulation”, “photo forensics” or similar will result in an endless stream of information and tools on the topic.
